UAR Head of Policy and Media Chris Magee on how scientific consensus emerges from a sea of papers

As part of my job, I have to keep a close eye on the media, and so it came to pass that I found myself in the comments section of a well-known national news source, where compassion famously goes to die, reading about the improbable properties of a new ‘superfood’. One commenter, a gentleman, wondered why scientists kept alternately finding that certain foods and drinks were good for you, then bad for you, then good for you again.

There are, of course, a number of reasons this seems to be the case. The first is that sometimes, if a scientific paper is not very good, its authors will want to get eye-catching claims into the media before the massive flaws in their methodology are exposed.

The second is that some in the media are happy to take a single scientific paper and consider it in isolation. It doesn’t matter that 100 papers say the Earth is a sphere – this one reckons it’s a square, so “Earth found to be square!” is the headline, for this week at least.

The trick didn’t work on me – I could see it was only one study, with a tiny sample size and all the scientific credibility of Will Ferrell’s ‘tachyon amplifier’ in Land of the Lost. However, it did make me reflect on how cherry-picking single studies is such a common tactic in pseudoscience. Never mind the 100 papers describing the evolution of the eye, this dude’s going to explain why tears are an example of irreducible complexity.

So it is with a certain strain of anti-research activist. I have mentioned the dodgy references of Dr Ray Greek before (an anecdotal study of six compounds does not data make), but they’re all at it: those who have decided to rubbish animal research by presenting a stylised view of the science behind it.

And they’ll find plenty to work with. It’s not hard to find a paper showing animal research failing in a particular area, or a paper with flaws. What’s missing, however, is the context. Science isn’t generally full of eureka moments. It’s a painfully slow, self-doubting, self-critical process. One paper may suggest that something is the case. Another may complement it. Slowly, a picture forms.

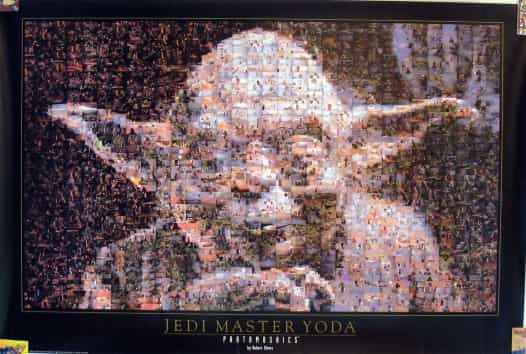

Articulating this in a way that’s more accessible to the layman, it reminded me of Yoda, or rather a Yoda poster my housemates had in university. It was a simpler time in the late 90s, and we could be found of an evening watching this new show called Friends (where the characters made enough waiting tables to rent loft apartments on Manhattan, so definitely pre-recession), or failing to see the hidden image in a ‘magic eye’ poster. We were also thrilled by a new type of mosaic poster where the image was made up of smaller images! Screenshots from the ACTUAL films! What a time to be alive:

But this is kind of how science works too – the bigger picture is made up of lots of smaller studies. In the Yoda poster, it doesn’t matter that dark screenshots form the background; there are still plenty of green ones to form the meta image.

When we talk about science, we are describing the emerging picture. When pseudoscientists cherry-pick studies, they are picking out just the dark tiles, and in my view it’s one of the nastiest tactics they employ, because it makes suckers of those who believe them. Failing to understand the meta nature of scientific consensus is absolutely essential if you’re to fall for the trick. It’s a joke with a victim. It’s a magic trick that actually breaks your watch.

I suppose it could be useful to carry out more metastudies on aspects of animal research to counter this sort of approach, but the field is so diverse that this would be tricky. One could perhaps look at drug testing, which is already studied in toxicology journals, but again there are so many different kinds of compounds and animals involved. In any case, testing substances makes up only a small part of animal research, though I know activists like to pretend otherwise, and you can’t do a metastudy of the efficacy of discovery, which is what most animal research actually is.

We can’t stop pseudoscientists misleading the public, and we can’t stop the public falling for it if they really want to give in to their confirmation bias. All we can really do is make sure we explain both the experiment and its context accurately, and encourage this best practice in the media and everywhere else. We should also remember that science isn’t a series of ‘eureka moments’ for a handful of scientists; it’s a self-critical Yoda poster created by a cast of thousands.

Last edited: 28 July 2022 08:44